Cracking the AI Enigma: Anthropic Researchers Play Technological Hide and Seek in the “Black Box”

“Decoding the AI mind: Anthropic researchers peer inside the ‘black box’”

“According to an exciting new paper from Anthropic, artificial intelligence (AI) can be approximately understood and optimized by methods as ‘simple’ as gradient descent. Think about that for a moment… gradient descent. Yeah, I bet you forgot all about that bad boy, didn’t you?”

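Well, for those of you who just hopped over to Google in an understandable panic, gradient descent is just a fancy name for how we optimize the parameters of a mathematical model (an AI model, in this context): nudge each parameter a little in whichever direction shrinks the error, then repeat until the error stops shrinking.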
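For the curious, here is roughly what that looks like in code. This is a minimal sketch and not anything taken from Anthropic's paper: the toy loss `(w - 3)^2`, the function names `gradient_descent` and `grad_loss`, and the learning rate and step count are all made up for illustration.

```python
def gradient_descent(grad, start, learning_rate=0.1, steps=100):
    """Repeatedly step against the gradient to shrink the loss."""
    w = start
    for _ in range(steps):
        # Move the parameter a little in the downhill direction.
        w = w - learning_rate * grad(w)
    return w


def grad_loss(w):
    """Gradient of the toy loss (w - 3)^2, which is smallest at w = 3."""
    return 2.0 * (w - 3.0)


# Start from a deliberately wrong guess and let the loop fix it.
w_min = gradient_descent(grad_loss, start=0.0)
print(round(w_min, 4))  # prints 3.0
```

A real model has millions or billions of parameters instead of one, but the loop is the same idea: compute which way is downhill, take a small step, repeat.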

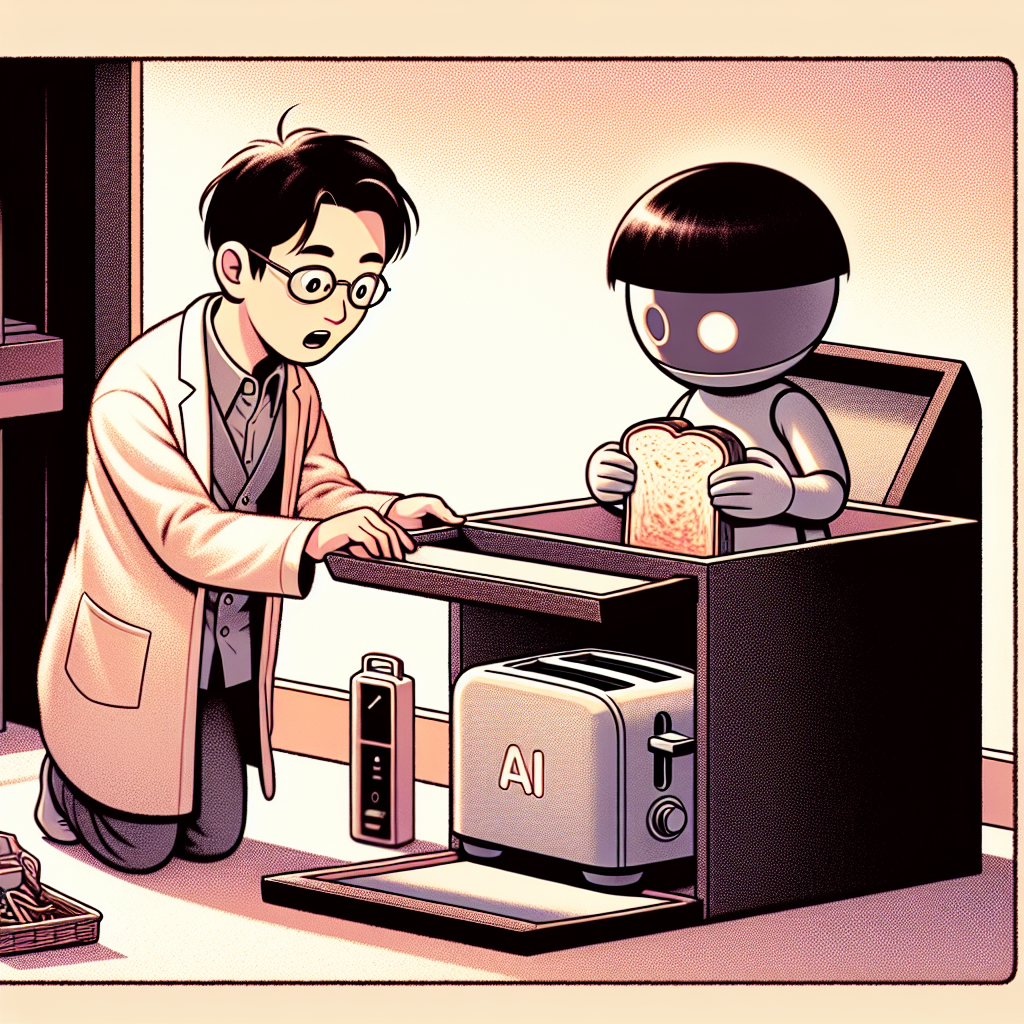

Anyway, Anthropic, a research company looking to make AI comprehensible to humans, just published a paper that sort of reads like the Pythagorean theorem of AI. They declare that AI isn’t an impenetrable, overly complex black box; rather, it’s fairly uncomplicated, sort of like your toaster, but a tad more exciting – kind of.

Now, as much as it’s fun to feed bread slices into your toaster and watch them pop up perfectly golden (or in some cases transformed into briquettes, ready to join their ancestors in the great forest of Charcoal), AI has significantly more exciting implications – if it can be trusted, that is. Here, Anthropic has a goal: they want AI to be understandable and, hence, more trustworthy. Fair enough, I’d say.

With Anthropic’s reasoning, the black box is becoming more like a smoky-grey box, and soon enough, we might flip open that lid and understand what we’re looking at. You’ve got to appreciate their thoughtfulness, though. Like, thank goodness for enthusiastic researchers who want to make sure there’s no artificial-intelligence-robopocalypse.

In the grand scheme of things, “anthropic” means “pertaining to humans or humanity.” Who knows, maybe these eggheads are getting closer to cracking the cryptic code of artificial intelligence? Now, if only they could tackle something truly challenging, like figuring out why socks disappear in the dryer. But one step at a time, I guess.

Though if you’re into math, then the paper’s filled with all those squiggly symbols you love so much. It’s no easy read, but hey, that’s why we have coffee and energy drinks.

So, there it is. The veil on that daunting ‘black box’ AI is slowly being lifted. The ironic thing is that AI was supposed to make our lives easier and now we’re bending over backwards trying to understand this supposed simplicity. But with companies like Anthropic on the case, maybe, just maybe, this tricky paradox will be understood. After all, clarity is always the first step to trust. Especially when we’re talking about potential toaster overlords.