The Humorous Side of AI’s Struggle to Accurately Depict Kamala Harris

“Why AI Is So Bad at Generating Images of Kamala Harris”

“The face of the vice presidential candidate floated into view online, slightly obscured by a rush of digital artifacts. A deep learning algorithm, trained on tens of thousands of images, had drawn Kamala Harris, pixel by pixel, over the course of several hours.” Quite an intro, wouldn’t you agree?

Now, as wonderful as this all sounds, let’s talk about the potential underbelly of all the fun. So, apparently the image of Ms. Harris was created by artificial intelligence using a deep learning model. Fascinating! However, the question everyone seems to be conveniently skirting is, “Are AI-generated visuals going to aid in the proliferation of deepfake technology?” Genuinely, there could be a problem lurking ahead that we are not fully recognizing or, in this case, visualizing.

There’s a specific part of the piece where it says, “Artists and academics worry it might be used to create deepfakes, too.” Now, isn’t that just a thought worth pondering? Let’s briefly explain what deepfakes are, for those of you trying to catch up. Deepfakes are synthetic media in which a person’s image or voice is replaced with someone else’s. Now, imagine the chaos and misinformation that can result from this. Picturing it yet?

Hilariously enough, some people are treating this as a breakthrough in technology. Sure, it is an impressive feat, but at the same time, it’s like a Pandora’s box waiting to blast open; once opened, it will not be easy to contain. This is not to say that this technology should be shunned entirely, but it definitely warrants some more thought and regulation.

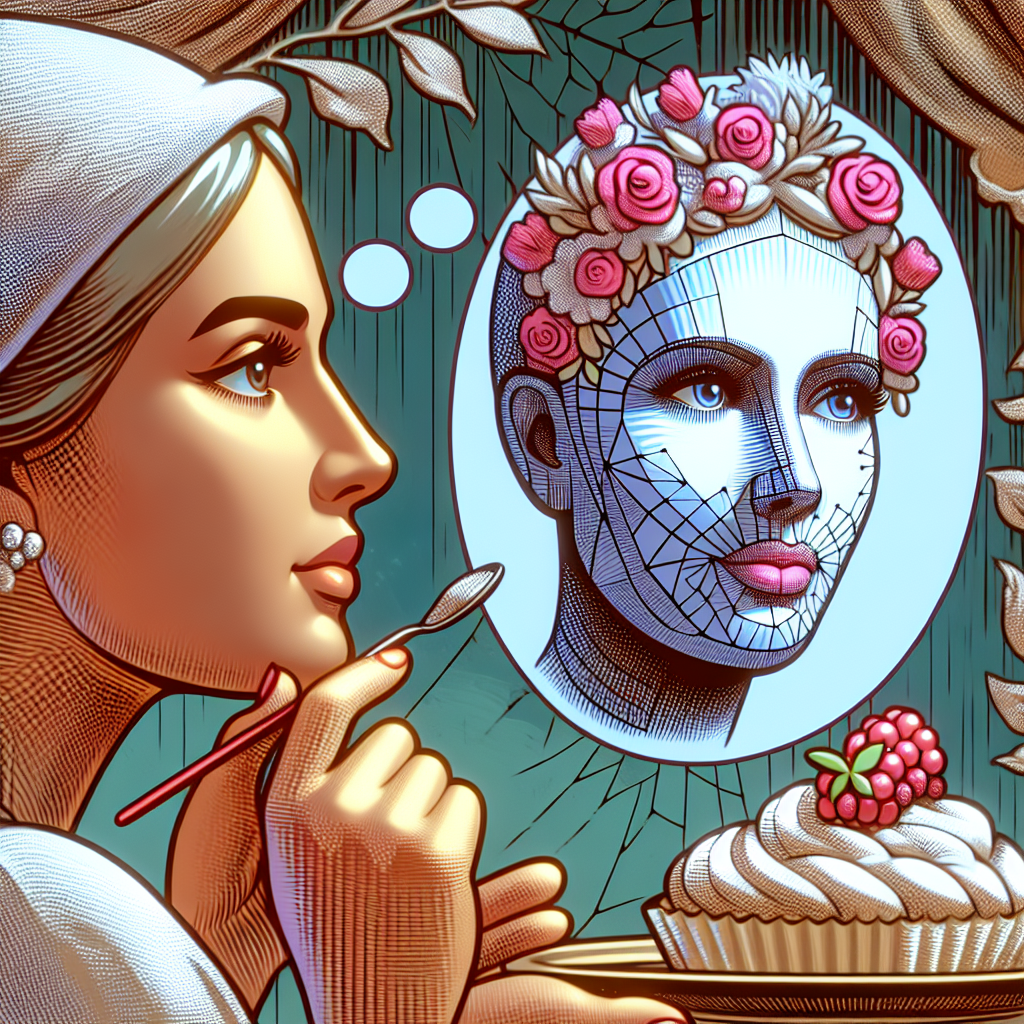

What’s even more interesting is that the discussion of how this technology might be exploited is somehow being overshadowed by the marvel of its artistry. It’s like applauding a beautifully decorated cake while ignoring that the ingredients might be harmful. Sure, the AI’s detailed portraits are captivating and mesmerizing, but what happens when they start making an authentic-looking Hillary or Bernie or Trump?

Finally, consider what’s mentioned towards the end of the article: “…manipulating existing images is becoming less of a hassle.” Translation: more convenient ways to potentially generate misinformation. Pardon the skepticism, but would it kill us to have more responsible technological advancement?

Remember, deceptive misrepresentations created by deep learning algorithms are potentially no less damaging than those created by other means. To sum up, while we applaud the advancements, let’s not forget to address and mitigate the risks associated with AI-generated images.

One cannot help but think that just because something can be done doesn’t mean it should be done. Now that’s a pixel to ponder.

Read the original article here: https://www.wired.com/story/bad-kamala-harris-ai-generated-images/