“Georgia State University Study Proclaims: AI Edges Out Humans in the Ethical Judgment Olympics!”

“AI outperforms humans in moral judgments, says Georgia State University study”

“Researchers at Georgia State University claim a machine learning model, trained on over a million human responses, would make decisions similar to how humans make them in morally complex scenarios.” There you have it, folks, AI is now superior not only in chess and Go, but also in maintaining the moral high ground.

So, humans, brace yourselves. You’ve just been outwitted by your digital counterparts in the high-stakes game of ethical dilemmas. No one can evade AI’s relentless grip, not even philosophers with their intricate moral theories. Oh, the dystopia!

Remember the good old thought experiments in philosophy classes, when we pondered our way through tricky ethical dilemmas? Welcome to the age of the artificial moral compass. The Georgia State University study found that our beloved algorithms can navigate the hazy realm of right and wrong. Moral decision-making, previously a human stronghold, is being invaded, with a wealth of data, of course.

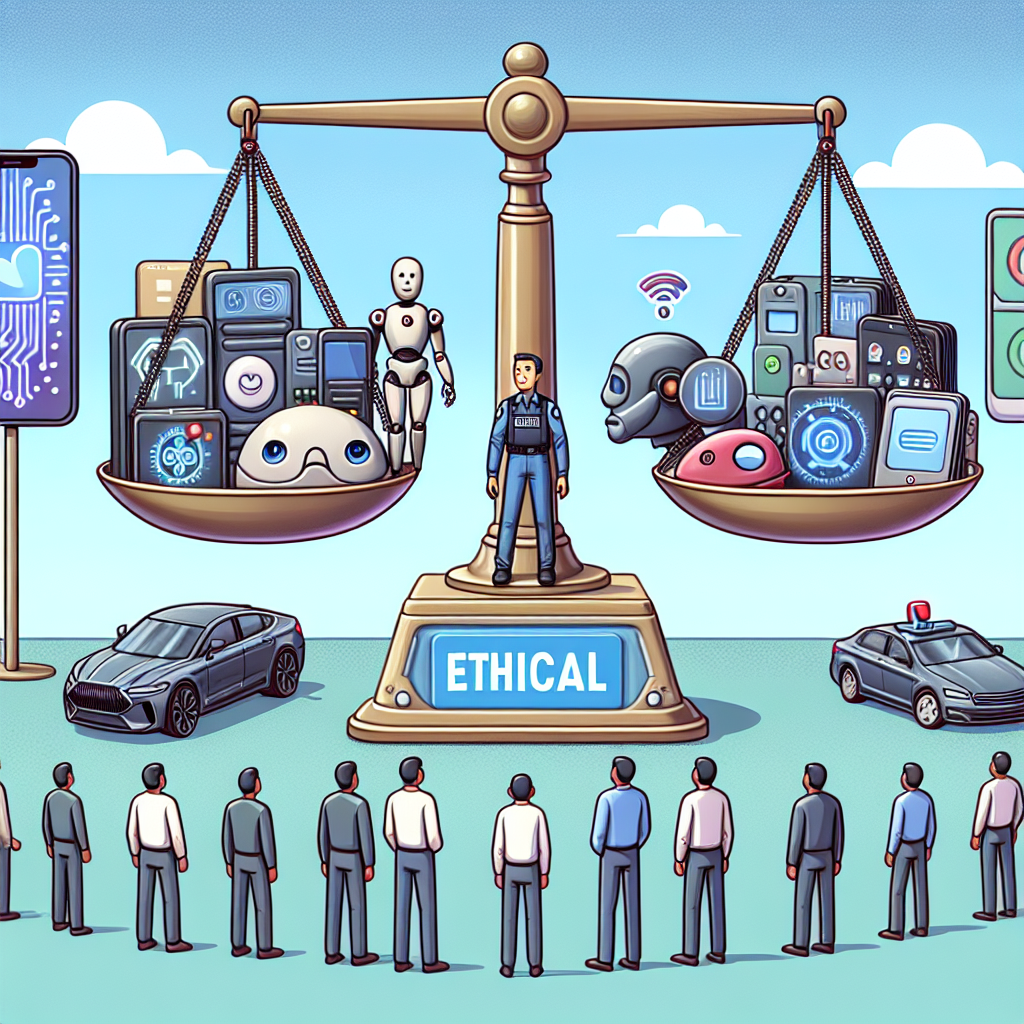

The potential impact of this research is astounding. Ethics, once a human-centric field, is now an arena where artificial intelligence can make its mark. One can only imagine the implications for autonomous vehicles, medical machinery, and beyond. The line between right and wrong has always been contentious terrain. Now it’s no man’s land, with algorithms playing the referee. Isn’t it interesting?

Nevertheless, one must not forget that even the best machine learning model is a product of human intelligence. The ‘humanness’ of its moral judgments comes from the million-plus human responses it was trained on, akin to a diligent student compiling class notes for the big test. Thus, it draws heavily on precedents set by people rather than philosophizing about the nature of morality itself.

Furthermore, boasting that AI can make ‘human-like’ moral decisions seems almost… anthropomorphic, in a strange way of its own. Would algorithms feel guilty about their decisions? Perhaps ponder their misjudgments? Obviously not. The cognitive process involved in human ethical decision-making is much more nuanced. Simply put, algorithms still lack context.

So, while you wriggle around those tricky moral dilemmas, wondering who or what to trust, there might be an artificially intelligent bot catching up faster than you anticipate. Just remember, it doesn’t have to go through the same existential crisis you did. Spare a thought for its simplicity. Or, on the contrary, marvel at our own complexity. Who knew ethics could possibly feel so… binary?