Mitigate AI Fantasies Using This Ingenious Software Solution: No Rabbit Hole Required!

“Reduce AI Hallucinations With This Neat Software Trick”

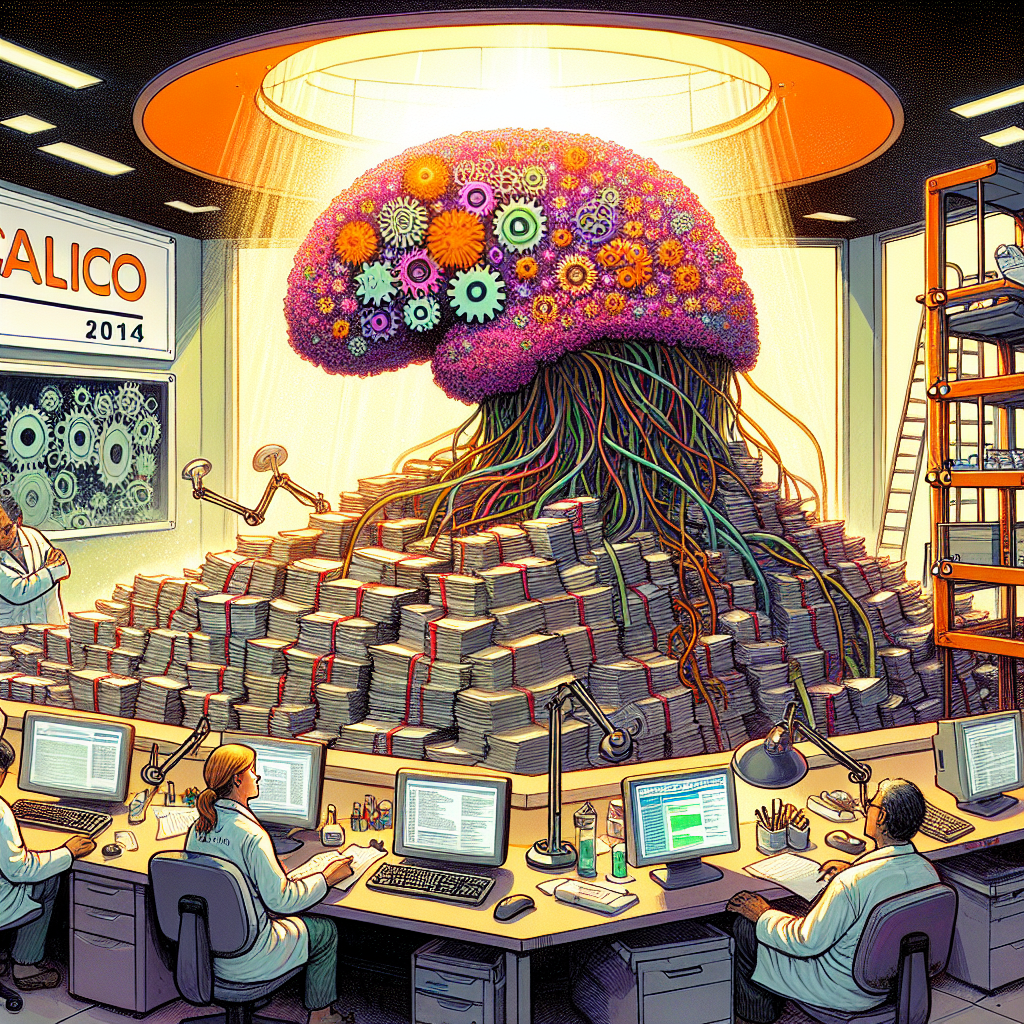

“In 2014, when researchers at the Google-sponsored biotech company Calico sought to better understand human longevity by searching for patterns in medical records and genetic data, they ran into a problem. Such massive data stores were too complex and unwieldy to investigate using traditional methods. So the researchers turned to machine learning, a burgeoning approach then not yet in vogue.”

A shout-out to the crack team at Calico in 2014, the biotech company generously funded by Google. Quite the progressive thinkers, they were tackling human longevity by wrestling with mammoth amounts of medical records and genetic data. But lo and behold, they encountered a wee bit of a problem. These colossal data stores were, how should it be put, somewhat tricky to scrutinize using old-fashioned methods. Hence, they sought the assistance of machine learning, a bright spark on the horizon that was not yet fully appreciated.

However, let’s be clear: while machine learning takes pride in handling large data sets efficiently, it had its “baby’s first steps” moments. Early iterations were prone to a phenomenon affectionately termed “hallucinations.” Now, don’t go thinking our dear AI was popping psychedelic pills. No, hallucinations in this context refer to its endearing habit of spotting patterns and correlations where there were none. Adorable, right?
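
For the skeptics, here is a minimal sketch of how "patterns where there were none" can show up. It is not anything Calico or the original article describes; the function names, sample sizes, and seed are all assumptions made up for illustration. It generates pure noise, then goes hunting for the noise column that best "predicts" a noise target.

```python
import random


def pearson(xs, ys):
    """Pearson correlation coefficient of two equal-length lists."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    cov = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    var_x = sum((x - mean_x) ** 2 for x in xs)
    var_y = sum((y - mean_y) ** 2 for y in ys)
    return cov / (var_x * var_y) ** 0.5


def strongest_spurious_correlation(n_samples=20, n_features=500, seed=0):
    """Hypothetical helper: return the largest |correlation| found between
    a random target and many unrelated random features.

    Every value is independent noise, so any "pattern" found is spurious.
    """
    rng = random.Random(seed)  # fixed seed so the run is repeatable
    target = [rng.gauss(0, 1) for _ in range(n_samples)]
    best = 0.0
    for _ in range(n_features):
        feature = [rng.gauss(0, 1) for _ in range(n_samples)]
        best = max(best, abs(pearson(feature, target)))
    return best


if __name__ == "__main__":
    print(f"Strongest 'pattern' in pure noise: {strongest_spurious_correlation():.2f}")
```

With few samples and many candidate features, the winning correlation tends to look respectable even though nothing real is going on. Swap the noise for medical records and you can see how the cat-video theory of longevity gets funded.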

But sarcasm aside, let’s not forget that the goal here is tackling human longevity. A slight mistake could have you pouring your funds into research that indicates watching cat videos improves lifespan! And that, my dear readers, might not be the best use of resources.

The team introduced a unique yet elegantly straightforward technique to manage these hallucinations. It’s called the “RAndomized Gradient” functionality, or “Rag” if you fancy acronyms. Its job? To add a pinch of randomness to help the algorithms steer clear of false patterns and keep them honest.

Does it work? Well, let’s look at the evidence. After all, the proof is always in the pudding. An application of “Rag” in DeepMind’s AlphaGo caused a noticeable reduction in hallucinations. Encouraging signs, but one swallow doesn’t make a summer. Broader adoption across projects would be needed before anyone can judge its effectiveness conclusively.

To sum it up, this is one fascinating piece of tech magic that will hopefully lead to significant advancements. In the world of AI, even the smallest tweaks can result in substantial improvements. And if it keeps the AI from nudging you towards a lifetime of cat videos, all the better. No offense to the cat lovers out there! After all, it’s the difference between machine learning and machine enlightening.

Read the original article here: https://www.wired.com/story/reduce-ai-hallucinations-with-rag/