A Heat-Wave of AI Knowledge: The Ins and Outs of Hot Expertise and Sparsity

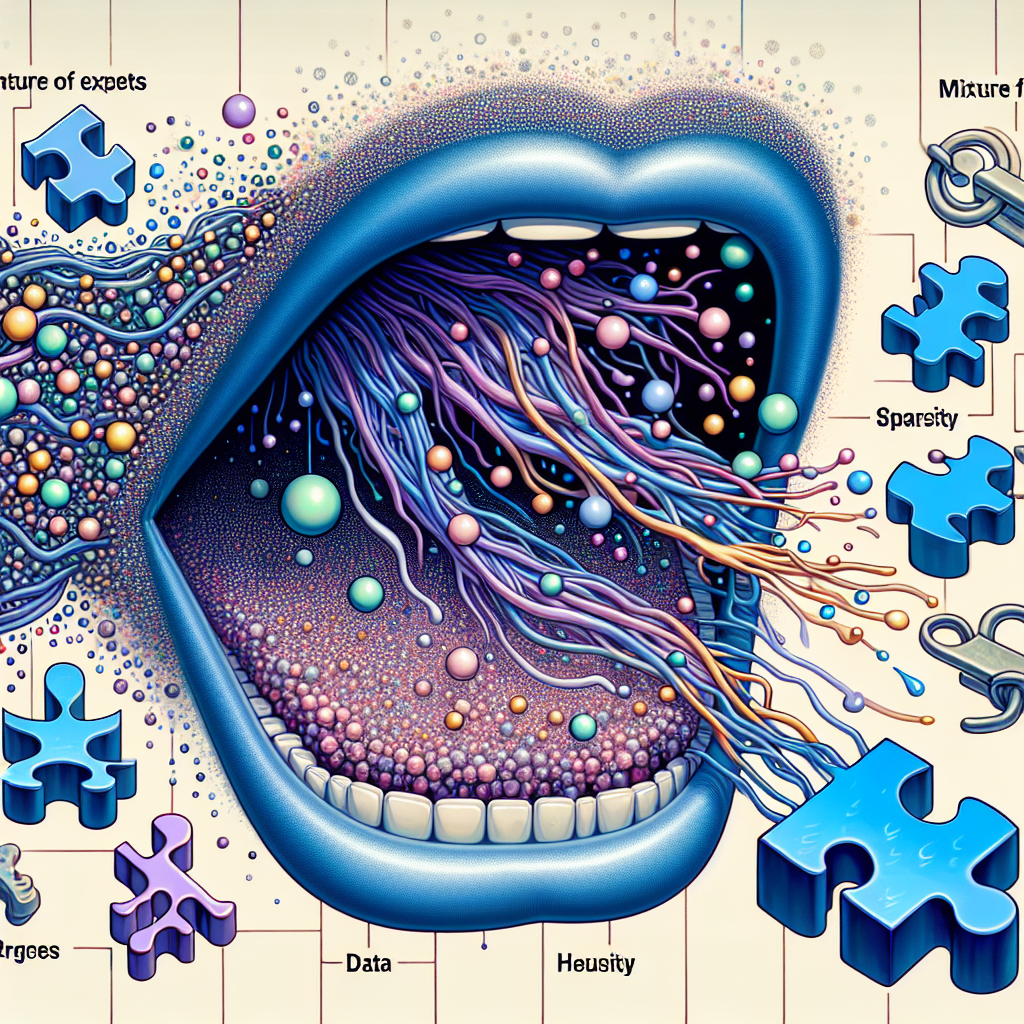

“Mixture of Experts and Sparsity – Hot AI topics explained”

“Deep learning has given us some great models that are impressive at mimicking human intelligence. Still, they also have a big weakness: they need an enormous number of examples to learn something new.” This quote perfectly sets the stage for today’s discussion, found on DailyAI, of two hot AI topics: the Mixture of Experts and the idea of sparsity.

If anything unsettles developers working with deep learning models, it’s the models’ astounding appetite for data. It’s like the way teenagers raid the fridge: pure, indiscriminate gluttony. The models seem to stand at the virtual buffet line piling their plates sky-high, only to forget much of what they’ve “learned” by dessert.

This is where the concept of a “Mixture of Experts” swoops in to save the day, sort of like the Avengers of the AI world. Having a single model learn everything is tough, so developers came up with the idea of dividing the responsibility among several “expert” models instead. Each expert tends to become proficient at a particular kind of input. It’s kinda like how a restaurant divides work among chefs: you wouldn’t want your sashimi handled by the pizza guy, right?

However, this solution creates another problem: managing numerous experts can become an organizational nightmare. It’s like trying to figure out which Tupperware holds last week’s lasagna in a fridge filled to the brim. To keep this from descending into complete chaos, a “gating network” looks at each input and decides which expert, or which weighted combination of experts, should handle it.
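To make the gating idea concrete, here is a minimal sketch in plain Python of a softmax-gated mixture. It assumes, purely for illustration, that each expert is a single linear layer and that the gating network is one linear layer followed by a softmax; the names (`DenseMixtureOfExperts`, `matvec`, `random_matrix`) are hypothetical, and real MoE layers are much larger and trained end to end rather than randomly initialized.

```python
import math
import random


def softmax(scores):
    # Subtract the max before exponentiating for numerical stability.
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def matvec(matrix, vector):
    # Plain-Python matrix-vector product: one dot product per row.
    return [sum(w * v for w, v in zip(row, vector)) for row in matrix]


def random_matrix(rows, cols, rng):
    return [[rng.gauss(0.0, 0.5) for _ in range(cols)] for _ in range(rows)]


class DenseMixtureOfExperts:
    """Hypothetical toy MoE: every expert runs, and the gate blends their outputs."""

    def __init__(self, dim_in, dim_out, num_experts, seed=0):
        rng = random.Random(seed)
        # Assumption for illustration: each expert is a single linear layer.
        self.experts = [random_matrix(dim_out, dim_in, rng) for _ in range(num_experts)]
        # Gating network: a linear layer producing one score per expert.
        self.gate = random_matrix(num_experts, dim_in, rng)

    def gate_weights(self, x):
        # Softmax turns the gate scores into non-negative weights summing to 1.
        return softmax(matvec(self.gate, x))

    def forward(self, x):
        weights = self.gate_weights(x)
        outputs = [matvec(expert, x) for expert in self.experts]
        # Output = sum over experts of (gate weight * expert output).
        return [
            sum(w * out[j] for w, out in zip(weights, outputs))
            for j in range(len(outputs[0]))
        ]


moe = DenseMixtureOfExperts(dim_in=4, dim_out=2, num_experts=3)
x = [1.0, -0.5, 0.25, 2.0]
weights = moe.gate_weights(x)  # three non-negative weights that sum to 1
y = moe.forward(x)  # a 2-dimensional blended output
print(weights, y)
```

Because every expert runs for every input in this dense version, it costs as much as evaluating all the experts; the gate only decides how much each expert’s answer counts.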

This conundrum brings us to the concept of sparsity. The human brain is a master of efficiency: it processes vast amounts of information on remarkably little power, in part because only a small fraction of its neurons are active at any given moment. So why can’t our models do the same? Incorporating sparsity into deep learning models, so that only a small fraction of a model’s parameters is used for each input, could lead to large efficiency gains: cutting the computation each input requires, possibly reducing our models’ data appetite, and helping them focus on what’s actually important instead of gobbling up everything in sight.
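Sparsity and the Mixture of Experts fit together naturally: instead of blending every expert, the gate can keep only the k highest-scoring experts for each input and skip the rest entirely. This top-k gating is the idea behind sparsely-gated MoE layers. The sketch below is a simplified, hypothetical illustration in plain Python (each expert is assumed to be a single linear layer, and `SparseMixtureOfExperts` and its helpers are made-up names), with a counter showing how many expert evaluations actually run.

```python
import math
import random


def softmax(scores):
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def matvec(matrix, vector):
    return [sum(w * v for w, v in zip(row, vector)) for row in matrix]


def random_matrix(rows, cols, rng):
    return [[rng.gauss(0.0, 0.5) for _ in range(cols)] for _ in range(rows)]


class SparseMixtureOfExperts:
    """Hypothetical toy MoE with top-k gating: only k experts run per input."""

    def __init__(self, dim_in, dim_out, num_experts, k, seed=0):
        if not 1 <= k <= num_experts:
            raise ValueError("k must be between 1 and num_experts")
        rng = random.Random(seed)
        # Assumption for illustration: each expert is a single linear layer.
        self.experts = [random_matrix(dim_out, dim_in, rng) for _ in range(num_experts)]
        self.gate = random_matrix(num_experts, dim_in, rng)
        self.k = k
        self.expert_calls = 0  # how many expert evaluations actually ran

    def route(self, x):
        # Score every expert (cheap), then keep only the k best.
        scores = matvec(self.gate, x)
        top = sorted(range(len(scores)), key=lambda i: scores[i], reverse=True)[: self.k]
        # Renormalize with a softmax over the selected experts only.
        weights = softmax([scores[i] for i in top])
        return list(zip(top, weights))

    def forward(self, x):
        y = [0.0] * len(self.experts[0])
        for idx, w in self.route(x):
            # Unselected experts are never evaluated: this is where sparsity saves compute.
            self.expert_calls += 1
            out = matvec(self.experts[idx], x)
            for j, value in enumerate(out):
                y[j] += w * value
        return y


moe = SparseMixtureOfExperts(dim_in=4, dim_out=2, num_experts=8, k=2)
rng = random.Random(42)
batch = [[rng.uniform(-1.0, 1.0) for _ in range(4)] for _ in range(10)]
outputs = [moe.forward(x) for x in batch]
print(moe.expert_calls)  # 20 (10 inputs x 2 experts); a dense mixture would run 80
```

With 8 experts and k = 2, each input runs only a quarter of the experts, so adding more experts grows the model’s capacity without growing the per-input expert compute. Production systems typically also add a load-balancing term during training so the gate doesn’t send every input to the same few experts; this sketch leaves that out.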

To sum up, the AI realm is clearly in a state of evolution and flux. Mixture of Experts and sparsity are exciting research directions that are attracting a lot of attention. Whether these methods can overcome the intrinsic limitations of existing models and take them to the next level, though, remains a thrilling open question. So keep your eyes on the AI landscape to see how things unfold. It’s safe to say it’s just getting started!