AI: A Love Affair Growing in the Shadows of Ignorance

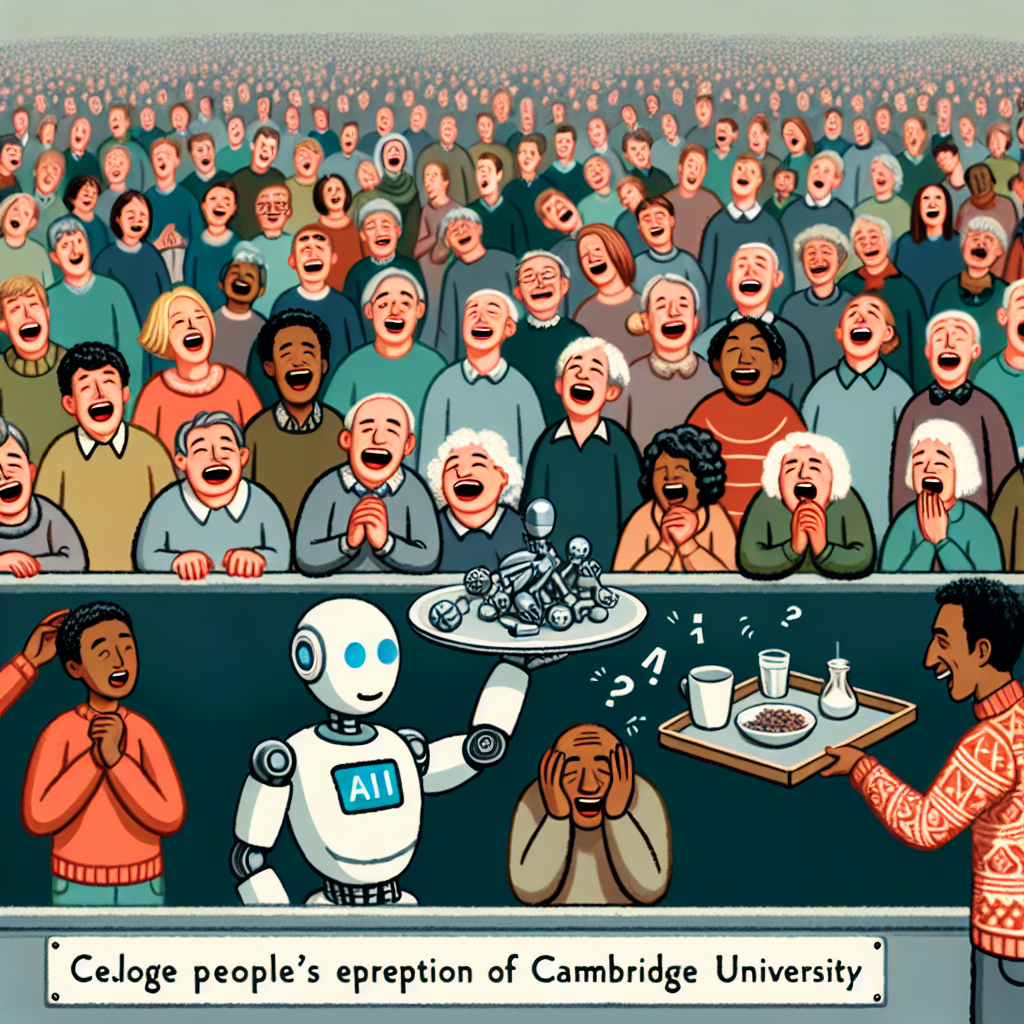

“The Less People Know About AI, the More They Like It”

“The more a person knows about how artificial intelligence works, the less they trust it. That’s the main finding of a new study at the University of Cambridge, which also discovered that the average Joe and Jane prefer an AI that makes mistakes over one that operates flawlessly.”

Believe it or not, the more people know about artificial intelligence (AI), the less they trust it. At least, that’s the main finding of a new study conducted at the University of Cambridge. In an ironic twist, the average Joe and Jane, it turns out, place more trust in an AI that isn’t perfect, one that, like any human, makes a good old mistake once in a while. A flawlessly operating AI seems a bit too eerie for regular folk to handle.

This paints a paradoxical picture: we live in a society increasingly dependent on AI infrastructure, yet the more we citizens learn about it, the more skeptical we become. Ours is a society running on AI, from cell phones and household appliances to chatbots, recommenders and personal assistants, and yet one comforted by the occasional AI blunder that assures us everything is normal.

Sure, it’s human nature to feel uneasy with the unknown. To fear the shark in the water, the monster under the bed. But with AI, the unknown is flipped on its head. Here, knowledge doesn’t bring comfort; it brings discomfort. Alternatively, one could say that our good old friend Murphy’s law, “anything that can go wrong, will go wrong,” is our AI safety blanket. Good job, Murphy.

This trend could inform future public policy and corporate practice around AI technologies. When it comes to damage control, for example, might disclosing errors be the way to the average Joe and Jane’s heart? A sprinkle of the occasional blunder, just to reassure us that the AI is genuine?

This finding offers food for thought. As AI continues to advance and grow more complex, will the knowledge-discomfort paradox deepen? Will it affect people’s willingness to use and accept AI technologies going forward? Only time will tell.

Until then, it seems, a spoonful of mistakes helps the AI go down. Or, to paraphrase our friend Murphy: if there’s no potential for a botch-up, don’t trust it. Now, how’s that for a paradox?