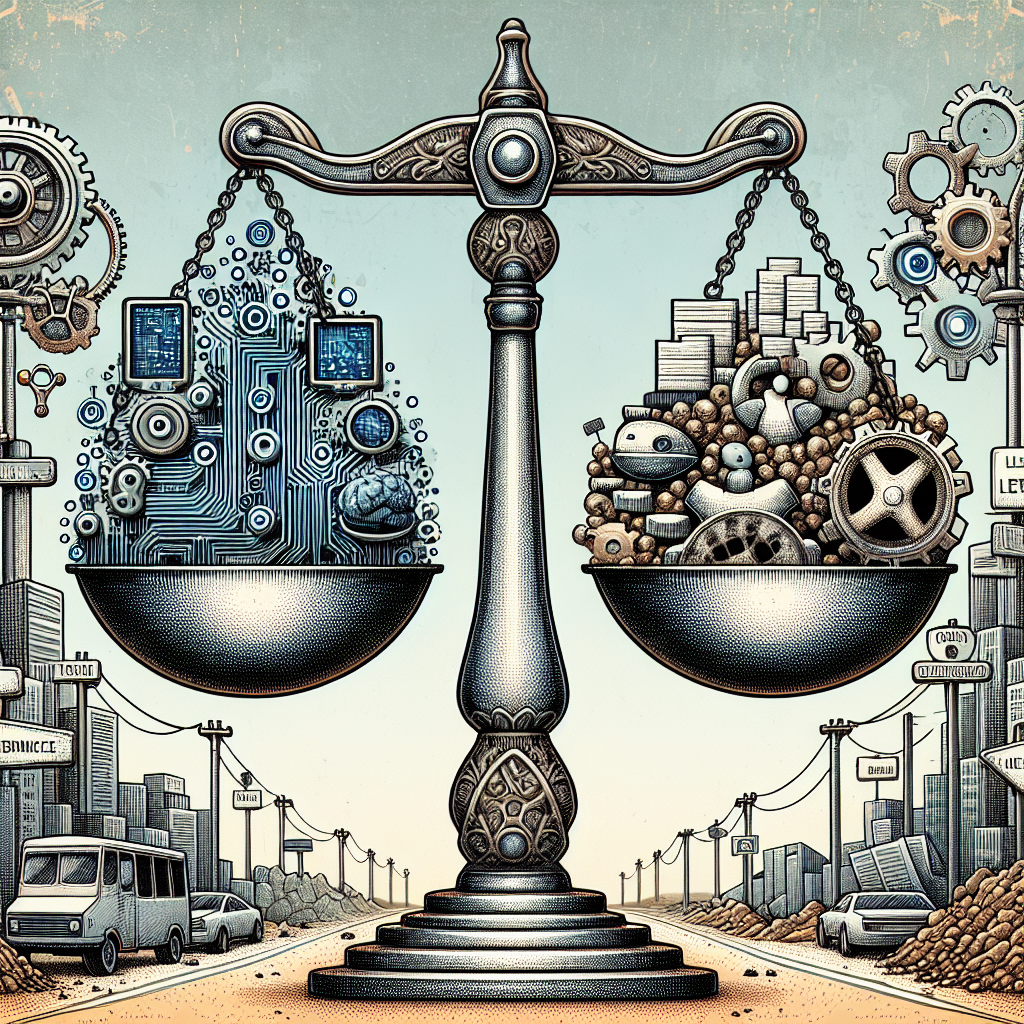

Exploring the Implications of the EU AI Act: What Are the Benefits and Pitfalls?

“The EU AI Act represents a huge step in regulating AI, but is there a cost?”

“Adopted by the European Union, the Artificial Intelligence Act is a pioneering set of regulations designed to manage high- and low-risk applications of AI. As revolutionary as it promises to be, does it come without a price?”

Oh boy, here we go again. Another groundbreaking announcement, another set of regulations purported to be the ‘future of technology’. Meet the EU’s fancy new child, the Artificial Intelligence Act. They hail it as the trailblazer of AI regulation, aimed at wrangling both high-risk and low-risk AI applications. But the million-dollar question, folks, is this: does it come with a hidden price tag, one we’re not ready to pay?

Just when you thought tech couldn’t get any more bureaucratic, voilà! The Europeans have created a whole new category: “high-risk AI systems.” These are systems that could lead to harm or injury or, even better, pose a threat to people’s fundamental rights. And the cherry on top? These high-risk applications will be checked for compliance before they even hit the market.

Hold on to your seats, venerable colleagues in the tech industry: it’s not just the high-risk AIs that face these ‘rigorous’ compliance checks. Low-risk AIs are also up for review. That’s right, those harmless chatbots and simple predictive text tools that make your life a tad easier are also coming under scrutiny. The folks in charge promise transparency and accountability, the Holy Grail of the AI industry, it seems. An AI system that isn’t socially responsible? Perish the thought!

The EU claims to be ushering in a new era of AI regulation without so much as blinking at the potential downside. While the intentions might be noble, there’s a real fear that the Act could stifle innovation. If the rules demand too much hoop-jumping, new players in the field might be deterred from sprouting at all, leaving the space to established companies that can afford lawyers, lobbyists, and compliance experts.

In the end, tech enthusiasts and developers have been left holding their breath, waiting to see how this pans out. We’re all for controlling AI and ensuring its responsible use, but if the price is innovation, have we really taken a step in the right direction? That, dear readers, is food for thought.