Daniela Amodei of Anthropic Thinks AI Safety Could Be the Market’s Next Cash Cow

“Anthropic’s Daniela Amodei Believes the Market Will Reward Safe AI”

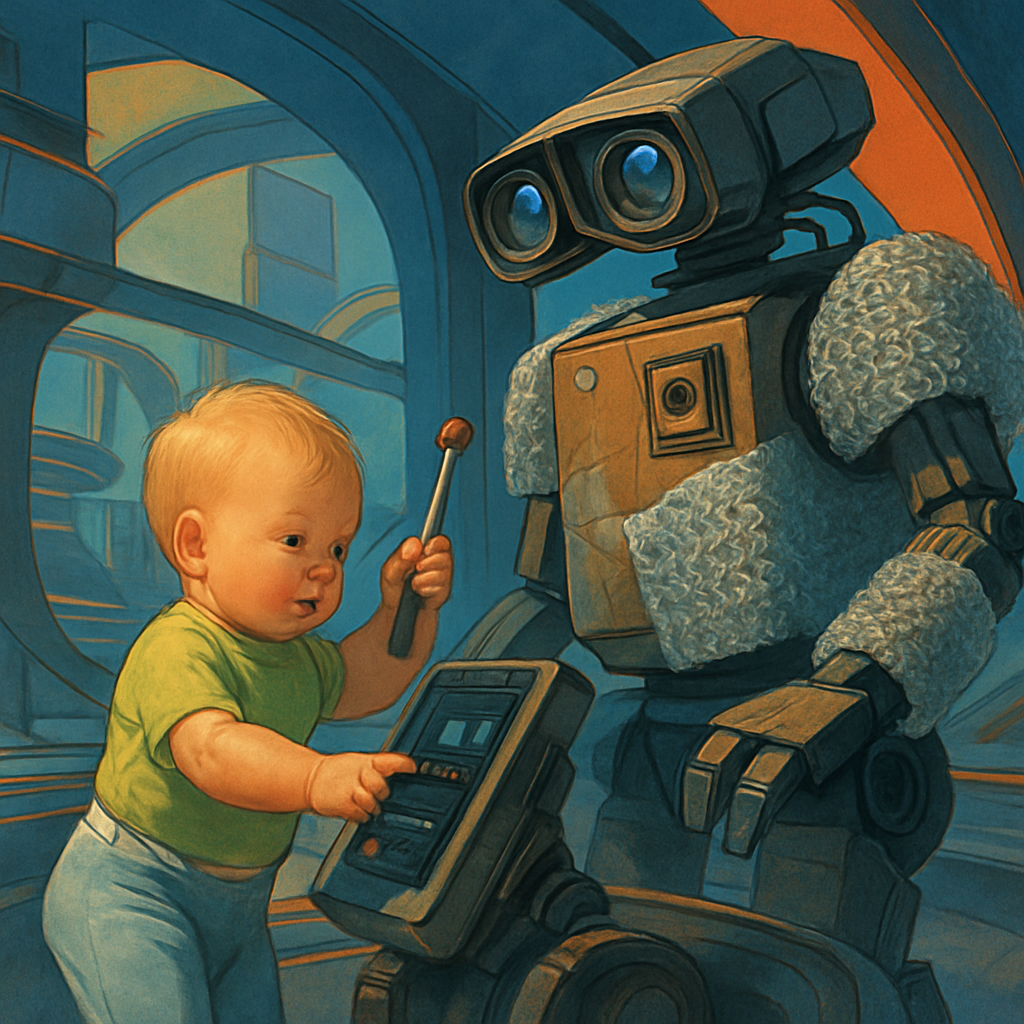

“In the universe of artificial intelligence, open-ended self-supervised learning is a relatively new galaxy. It’s a method of teaching machines by having them learn from large amounts of data, with no specific task in mind.” Here, in a nutshell, is the crux of both the fascination and the fear surrounding AI. It’s rather like handing a toddler the nuclear launch codes and stepping back to see what happens: a veritable ‘babies gone wild’ scenario.

Daniela Amodei, a former vice president at OpenAI, ventures into this unpredictable but promising territory as co-founder and president of Anthropic, an AI safety and research company. Anthropic plays the part of the anxious mother, making sure that the nuclear-code-wielding toddler doesn’t lead us into a dystopian techno-saga. Amodei herself states, quite sensibly, one might add, that “we’re building machines that learn from data….we want to make sure that we understand what behavior they’re going to learn.” A fair point, as nobody really loves surprises, especially not the potentially apocalyptic AI variety.

Apparently, self-supervised learning, the grand AI trend du jour, is notoriously difficult to understand and control. The AI system soaks up tremendous amounts of information, not unlike a high-functioning sponge, yet nobody hands it labeled examples or a specific task: its only objective is to predict missing or upcoming pieces of the data itself, such as the next word in a sentence. It is, effectively, a maverick with the potential to either liberate society or take it hostage, much like a Wild West cowboy or a rogue superhero.

Anthropic’s focus isn’t only on developing this method of AI learning but also on making it safe. Suddenly, the tech world is taking AI ethics and safety seriously, a refreshing change from the blind technological arms race we’ve been witnessing. If we’re going to bring humanity face-to-face with groundbreaking technology like self-supervised AI systems, we shouldn’t forget to install a safety harness.

In conclusion, Anthropic and Amodei, one of the visionaries at its helm, are treading cautiously into the uncharted territory of AI, where a delicate balancing act is always required. May humanity choose wisely, and may open-ended self-supervised learning end up more WALL-E than Terminator. After witnessing the AI equivalent of a child running with scissors, it is comforting to know that someone out there is trying to bubble-wrap the edges. Hats off to this newfound, responsible approach to the unfathomable waters of artificial intelligence.