Publishers Flip through the Web in Scuffle Over AI Training Data: A Chapter of Humor and Professionalism

“Publishers Target Common Crawl In Fight Over AI Training Data”

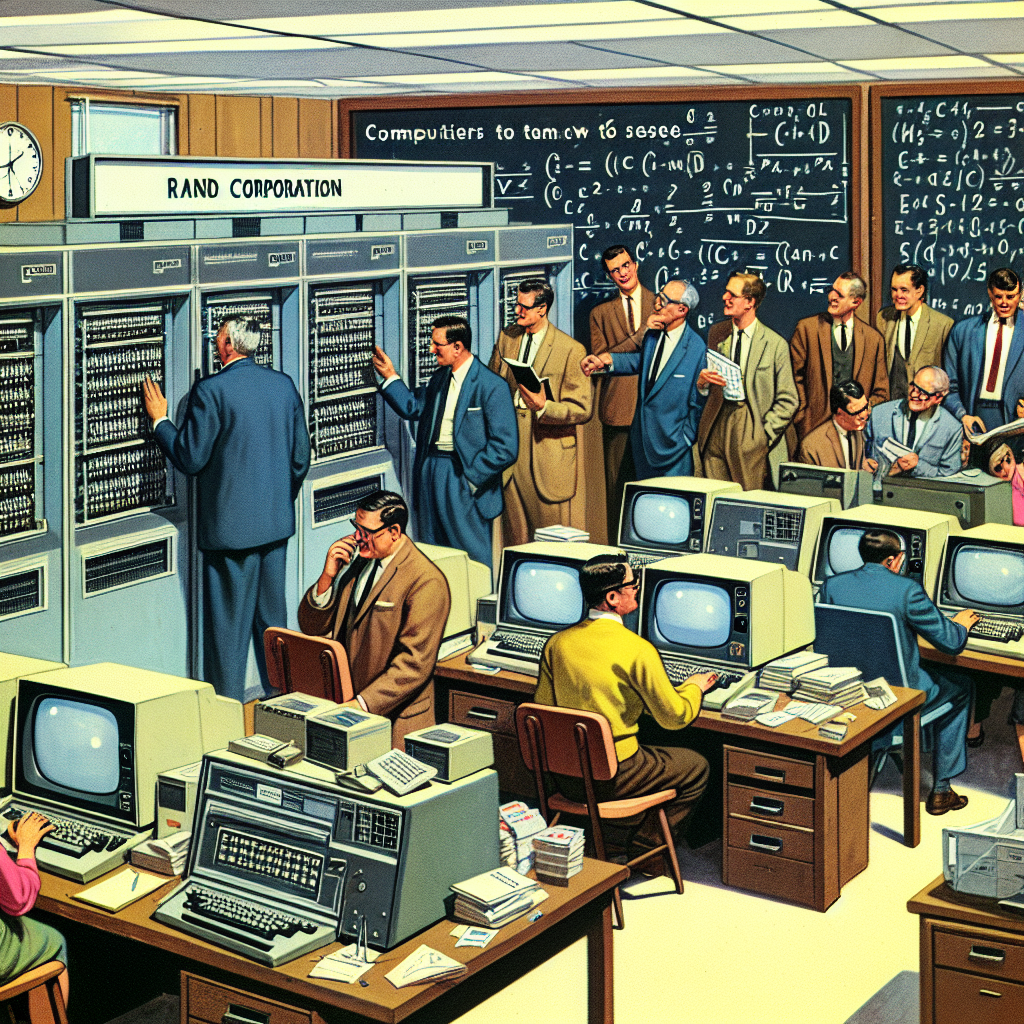

“In 1961, when computers still filled entire rooms and had less processing power than your smartphone, two mathematicians at the RAND Corporation—a Cold War think tank—set out to teach a machine to see. It was a goal shared by a growing number of researchers worldwide who believed that replicating human sight, even approximately, would lead to all sorts of revolutionary technologies.”

Take it back to 1961, when the smartphone was not even a glimmer in Steve Jobs’ eye and computers took up more space than your studio apartment. At the time, a dynamic duo of mathematicians from the RAND Corporation, a Cold War think tank, embarked on a seemingly outrageous endeavour: teaching a computer to see. The idea wasn’t exclusive to them; researchers worldwide shared the dream that mimicking human vision, even approximately, would unleash a slew of ground-breaking technologies.

Now fast forward to today, when smartphones detect faces and smart security cameras recognise intruders. Barely a blink of the proverbial eye after those humble beginnings, we are living in the future those researchers envisioned. Yet these monumental advancements come with a Pandora’s box of ethical dilemmas involving artificial intelligence.

As the article rightly discusses, questions of ethics and AI are as tangled as your headphone cords after a day in your pocket. How do we ensure AI systems are unbiased? How can these systems understand ‘context’ without a human-like comprehension of the world? Some even argue that the foundations on which AI is built, from perceptron-style models to the datasets used to train them, may need recalibration or even complete replacement. Quite a headache, considering these are the foundations of how AI learns.

There have already been cases of algorithmic discrimination, such as faulty facial recognition matches leading to innocent people being wrongfully arrested. Could it be that we jumped into the deep end of AI capabilities before we fully understood how deep the water was?

These are not easy questions to answer, and there are no apparent solutions. Scholars, researchers and ethicists worldwide are wrestling with these conundrums. It is becoming evident that as we become more efficient at teaching machines to see, we have to become equally adept at helping them understand the complexities of fairness, equality and justice.

So, the old adage remains true: with great power comes great responsibility. The fight against AI (or rather, for morally grounded AI) rages on, one algorithm at a time. Let’s just hope that our ethics and understanding evolve in tandem with the technology, so that we harness AI’s potential responsibly.