“Artificial Intelligence Dupery Setbacks: A Comedic Tragedy for Voters in the Global South”

“AI-Fakes Detection Is Failing Voters in the Global South”

“AI researchers are starting to acknowledge a persistent gap in their field. It’s a blind spot, one born from a kind of technological unconscious bias. And like most biases, once you know about it, you start to see it everywhere.”

In the world of Artificial Intelligence (AI), there's an ongoing game of cat and mouse between the systems that generate fake content and the systems built to detect it: a battle of wits, if you will. This frank admission from researchers suggests there's something even sophisticated AI hasn't cracked yet. Charmingly human, isn't it?

The proverbial blind spot this piece dwells on relates to ‘generative AI’: systems trained to produce new content, whether that’s a fresh poem in the style of Rumi or a synthetic cat video. The widening detection gap is no fairy tale. It exists because detection systems often can’t reliably tell whether the content in front of them was created by another AI. It’s the machine equivalent of ‘does he like me, or is he just being nice?’ Absolutely riveting.

Indeed, the flurry of innovation in generative AI, from deepfake video tools to language models like GPT-3, has been impressive, to say the least. There’s no denying their creative prowess. But for all their flair and panache, the tools meant to spot their output are stuck in a guessing game.

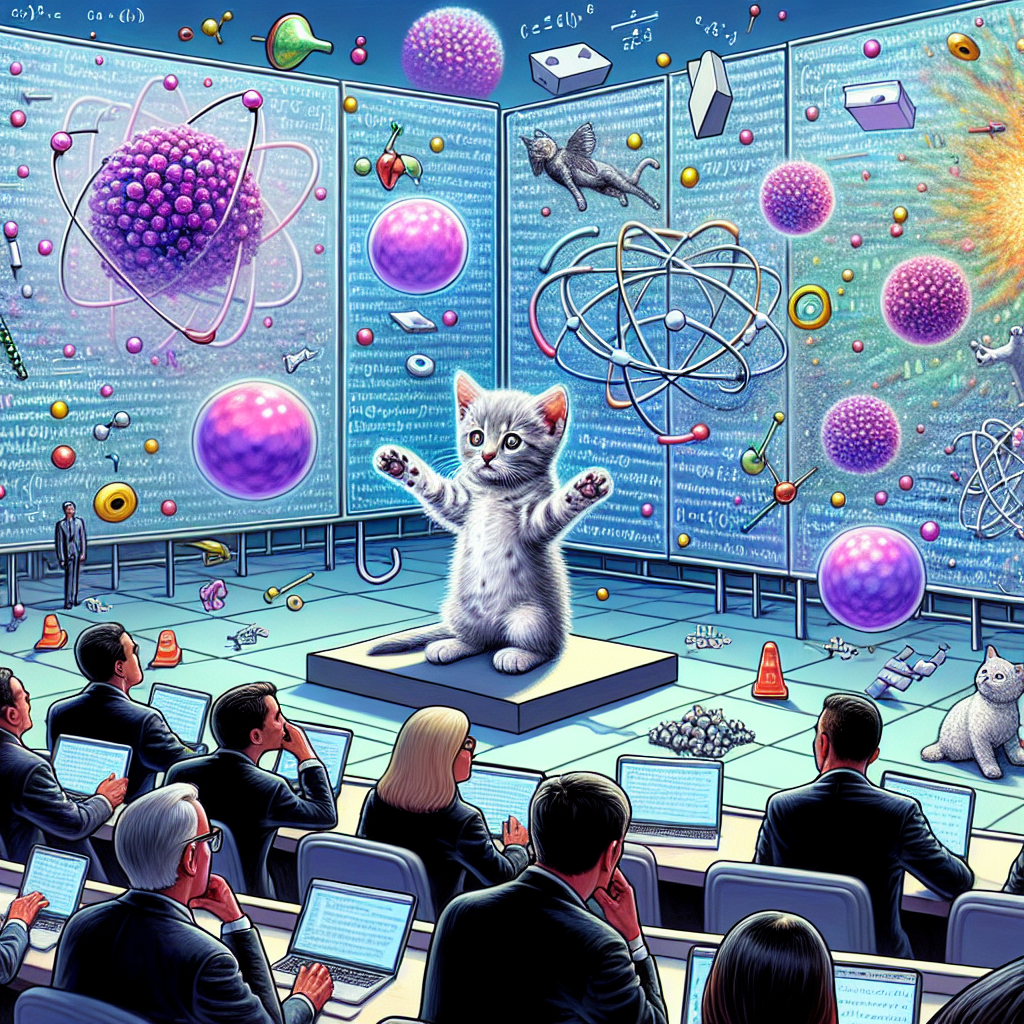

It’s as if these detectors have the memory of a goldfish. Train one on a few examples of a particular kind of deepfake and it may learn to catch that kind, like a cat finally glimpsing its own tail. But throw something new into the mix, a different manipulation technique or a change in facial expression, and the poor thing is back to square one, as clueless as a lost kitten at a quantum physics convention.

This isn’t all doom and gloom, of course. The article points out that the gap between detection and generation can motivate new research paths and strategies. Some AI researchers are now experimenting with what’s known as ‘differential privacy’, a rather fanciful name for a technique that adds carefully calibrated statistical noise to data. They hope this will help improve AI’s detection. Interesting, eh?
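For the curious, here is roughly what “adding statistical noise” means in differential privacy. This is a minimal sketch of the textbook Laplace mechanism applied to a simple count, assuming a hypothetical dataset of “fake”/“real” labels; it is not how the researchers in the article apply the idea to detection, and the function names are made up for illustration.

```python
import random


def laplace_noise(scale: float, rng: random.Random) -> float:
    # The difference of two independent exponential draws with mean `scale`
    # follows a Laplace distribution centred at 0 with that scale.
    return rng.expovariate(1.0 / scale) - rng.expovariate(1.0 / scale)


def private_count(records, predicate, epsilon: float, rng=None) -> float:
    """Hypothetical helper: release a count under epsilon-differential privacy.

    A counting query has sensitivity 1 (adding or removing one record changes
    the true count by at most 1), so the Laplace noise scale is 1 / epsilon.
    Smaller epsilon means more noise and stronger privacy.
    """
    if epsilon <= 0:
        raise ValueError("epsilon must be positive")
    if rng is None:
        rng = random.Random()
    true_count = sum(1 for r in records if predicate(r))
    return true_count + laplace_noise(1.0 / epsilon, rng)


# Usage with a made-up dataset: 30 items labelled "fake", 70 labelled "real".
labels = ["fake"] * 30 + ["real"] * 70
noisy = private_count(labels, lambda x: x == "fake", epsilon=1.0, rng=random.Random(42))
print(f"noisy count of fakes: {noisy:.2f}")
```

Any single release is deliberately fuzzy, but averaged over many releases the noise cancels out around the true count, which is the trade-off differential privacy is built on.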

So, dear researchers, it’s not all bad. Remember, in the epic game of cat and mouse, sometimes the mouse needs a little head start. Keep innovating, keep adding a little quirkiness to the AI, and who knows: one day the machine might just close the detection gap and finally realize it’s been chasing its own tail all along.

Read the original article here: https://www.wired.com/story/generative-ai-detection-gap/