Google’s Star Performer, the Gemini AI Model, Hits the Gym for a Major Power-Up!

“Google’s Flagship Gemini AI Model Gets a Major Upgrade”

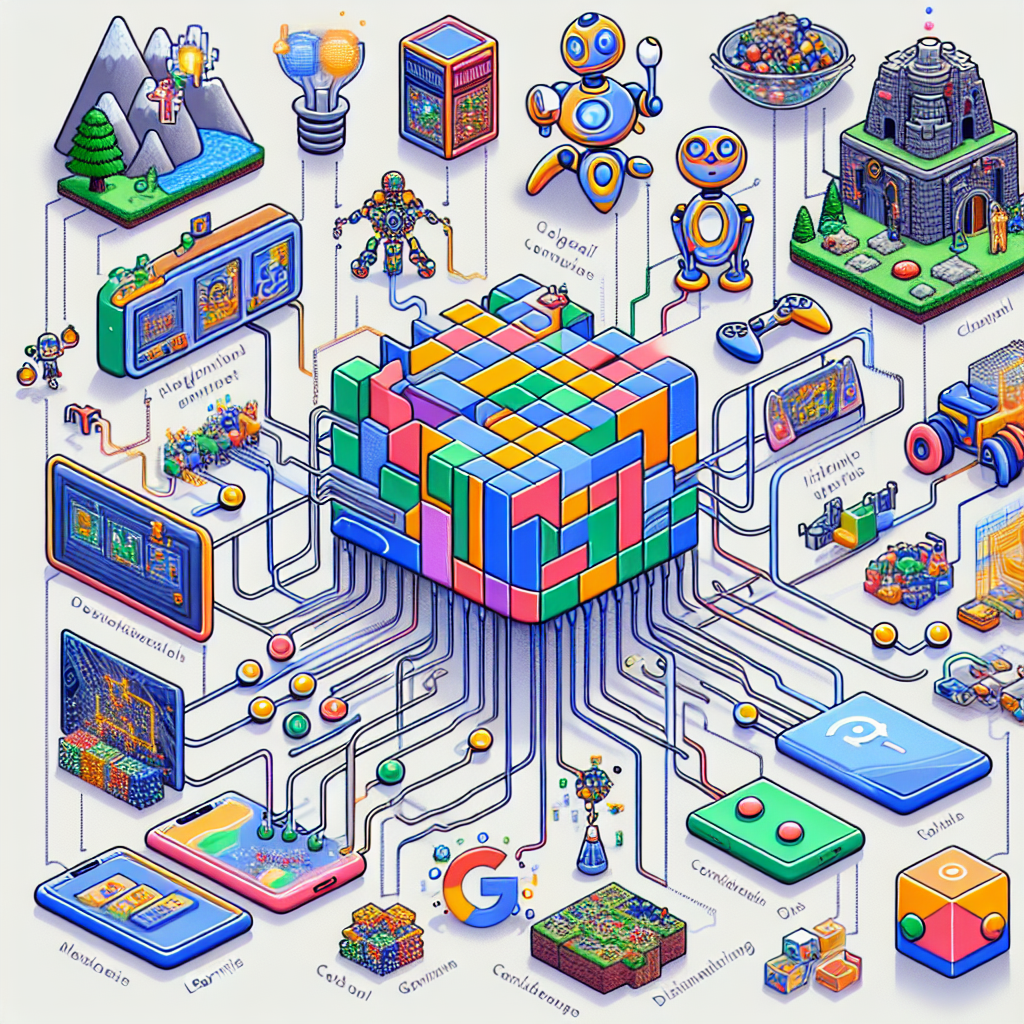

“Google and its London sibling, DeepMind, have published research about how artificial intelligence can be made more adaptable and capable by combining different forms that learned to play video games through repetition and reinforcement. Ideally, such an AI would be able to play any game well without needing additional training.”

Those lazy days of mindlessly crushing candies and flinging angry birds are long gone, thanks to Google and DeepMind. With their wand of machine-learning magic, they’re conjuring an AI so spectacularly versatile that it has left conventional expectations in the dust. The promise? An AI wonder that learns from experience and steps onto any gaming battlefield armed with nothing but its wits and will. No extra training? What sorcery is this?

DeepMind is renowned for crafting AI marvels that shine brighter than a supernova in the digital landscape. Take AlphaGo, the program that beat the world’s best players at Go, a board game thousands of years old. Then came AlphaGo Zero, following in its predecessor’s digital footprints while carrying a torch of its own: it learned gameplay strategies from scratch through self-play rather than swotting up on human expertise. Autonomous learning was the crowning glory of the Alpha saga, but wait till you meet their dazzling sibling: Gemini.

Gemini, as befits its astrological namesake, the Twins, is a blend of several existing AI learning approaches: punishing old mistakes, rewarding new strategies, picking the best of many options, and learning from experience to adjust future behavior. It’s no longer just about learning from scratch or leaning heavily on human-provided data. It’s about growing, improvising, and evolving.

We could talk about how DeepMind’s Approach technique gives Gemini an array of options, and how Temporal Value Transport (TVT) helps it learn from past mistakes. But let’s keep it simple: Gemini laughs in the face of losses and bounces back smarter, every time.

To put this showboat of an algorithm to the test, Google and DeepMind threw the gallant Gemini into an arena against ‘video-game adversaries’. The result? Gemini reached 85% of a professional player’s performance, a sign that it’s not merely a one-trick pony.

Granting machines the power to learn and adapt on their own feels at once like an exciting step forward and like a twisted dystopian fairy tale. For now, let’s just hope these smarty-pants algorithms stick to their gaming shenanigans. When they find their way into our day-to-day lives, we’d better be ready. After all, there’s nothing more humbling than being outsmarted by a machine that learned to beat you at your own game. Mankind had better up the ante: the future of AI gaming is here, and it’s captivatingly competitive.

Read the original article here: https://www.wired.com/story/google-deepmind-gemini-pro-ai-upgrade/